#chatgpt replace developers

Explore tagged Tumblr posts

Text

Enhancing Conversational AI: Insights and Innovations from ChatGPT Developers

Dive into the world of conversational AI with the ChatGPT Developers. Discover the newest developments, industry best practices, and creative fixes for smooth, user-friendly chat experiences. Whether you're a novice or an experienced developer, get insightful knowledge that can improve your chat programming tasks.. Join the ChatGPT Developers community and transform the way you build and interact with AI-driven chatbots. For more information visit: https://chatgpt-developers.com/

#Chatgpt Developers#Chatgpt developer#chat gpt will replace programmers#chat gpt programmers#chatgpt replace developers#developers of chatgpt

0 notes

Text

Opened Reddit by accident while trying to type in a bands youtube channel and saw this:

https://www.reddit.com/r/ChatGPT/comments/1guhsm4/well_this_is_it_boys_i_was_just_informed_from_my/

Well this is it boys. I was just informed from my boss and HR that my entire profession is being automated away. For context I work production in local news. Recently there’s been developments in AI driven systems that can do 100% of the production side of things which is, direct, audio operate, and graphic operate -all of those jobs are all now gone in one swoop. This has apparently been developed by the company Q ai. For the last decade I’ve worked in local news and have garnered skills I thought I would be able to take with me until my retirement, now at almost 30 years old, all of those job opportunities for me are gone in an instant. The only person that’s keeping their job is my manager, who will overlook the system and do maintenance if needed. That’s 20 jobs lost and 0 gained for our station. We were informed we are going to be the first station to implement this under our company. This means that as of now our entire production staff in our news station is being let go. Once the system is implemented and running smoothly then this system is going to be implemented nationwide (effectively eliminating tens of thousands of jobs.) There are going to be 0 new jobs built off of this AI platform. There are people I work with in their 50’s, single, no college education, no family, and no other place to land a job once this kicks in. I have no idea what’s going to happen to them. This is it guys. This is what our future with AI looks like. This isn’t creating any new jobs this is knocking out entire industry level jobs without replacing them.

Don't ever let any AI-supporters try to claim that AI will 'never make people lose their jobs!' because it's already been happening before this, and now this thread is full of stories of people talking about people being laid off from jobs they'd worked for 40 years to be replaced by AI :/

*this* is why it is so important to not tolerate generative AI, even when it's "just a joke" or "for a meme" I'm just eternally greatful that Tumblr hasn't been completely swarmed with AI generated images the way facebook has...

152 notes

·

View notes

Text

Hasbro's CEO is, once again, expressing interest in using AI at WOTC

Not surprising, but I think his own chit-chat about it (directed at shareholders, of course) is quite the read (derogatory):

"Inside of development, we've already been using AI. It's mostly machine-learning-based AI or proprietary AI as opposed to a ChatGPT approach. We will deploy it significantly and liberally internally as both a knowledge worker aid and as a development aid. I'm probably more excited though about the playful elements of AI. If you look at a typical D&D player....I play with probably 30 or 40 people regularly. There's not a single person who doesn't use AI somehow for either campaign development or character development or story ideas. That's a clear signal that we need to be embracing it. We need to do it carefully, we need to do it responsibly, we need to make sure we pay creators for their work, and we need to make sure we're clear when something is AI-generated. But the themes around using AI to enable user-generated content, using AI to streamline new player introduction, using AI for emergent storytelling, I think you're going to see that not just our hardcore brands like D&D but also multiple of our brands."

This directly fights against WOTC's already very weak claims about not wanting to use AI (after massive backslash from players anytime they had tried to get away with it), and does paint quite the bleak future for DnD and Magic the Gathering. AI usage doesn't really benefit the consumer in any way- It's like a company known for nice homemade cakes trying to tell you that factory made cakes are actually also good and you should be buying them too. The cakes aren't better. You can get those cakes elsewhere. The only person benefiting from factory made cakes is the one selling them, because they're the one saving time and money by making them that way.

But short-term benefits (through firing large portions of their artists and replacing them with AI made slop) outweighs any attempt to maybe get some trust from their already alienated consumers back. I also find it kind of incredibly funny and pathetic how this man claims to play DnD with about 30 to 40 people and "how every single one of them uses AI". I'm not entirely sure this guy is even aware of how DnD groups are usually sized, and how you would not have any physical time to do anything if you somehow played with 40 players on the regular (that'd be about 10 games!)

Anyways, as always, there's nice TTRPGs out there that don't absolutely despise their customer base nor are obsessed with cutting any remains of humanity out of their product to save a few cents. Play Lancer, play Blades in the Dark, play Pathfinder or Starfinder 2e if you want the DnD experience without the bullshit. Plenty of options out there that deserve your money far more than DnD.

#ttrpg#ttrpgs#hasbro#wotc#wizards of the coast#mtg#magic the gathering#long post#i know i rant a lot about wotc so uhhh feel free to mute wotc as a tag if you wanna filter them

146 notes

·

View notes

Text

How Authors Can Use AI to Improve Their Writing Style

Artificial Intelligence (AI) is transforming the way authors approach writing, offering tools to refine style, enhance creativity, and boost productivity. By leveraging AI writing assistant authors can improve their craft in various ways.

1. Grammar and Style Enhancement

AI writing tools like Grammarly, ProWritingAid, and Hemingway Editor help authors refine their prose by correcting grammar, punctuation, and style inconsistencies. These tools offer real-time suggestions to enhance readability, eliminate redundancy, and maintain a consistent tone.

2. Idea Generation and Inspiration

AI can assist in brainstorming and overcoming writer’s block. Platforms like OneAIChat, ChatGPT and Sudowrite provide writing prompts, generate story ideas, and even suggest plot twists. These AI systems analyze existing content and propose creative directions, helping authors develop compelling narratives.

3. Improving Readability and Engagement

AI-driven readability analyzers assess sentence complexity and suggest simpler alternatives. Hemingway Editor, for example, highlights lengthy or passive sentences, making writing more engaging and accessible. This ensures clarity and impact, especially for broader audiences.

4. Personalizing Writing Style

AI-powered tools can analyze an author's writing patterns and provide personalized feedback. They help maintain a consistent voice, ensuring that the writer’s unique style remains intact while refining structure and coherence.

5. Research and Fact-Checking

AI-powered search engines and summarization tools help authors verify facts, gather relevant data, and condense complex information quickly. This is particularly useful for non-fiction writers and journalists who require accuracy and efficiency.

Conclusion

By integrating AI into their writing process, authors can enhance their style, improve efficiency, and foster creativity. While AI should not replace human intuition, it serves as a valuable assistant, enabling writers to produce polished and impactful content effortlessly.

38 notes

·

View notes

Note

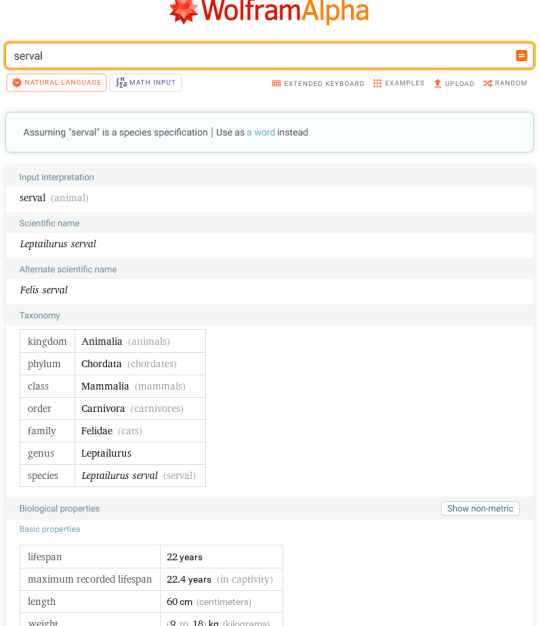

Hello Checkov! On the subject of AI ruining google search results: Wolfram Alpha is a special search engine that should be a little more immune to AI generated content. It doesn’t link to webpages and instead uses a built-in AI that is way older than ChatGPT and specifically designed to be as accurate as possible. It doesn’t always give you images, and it’s been out for 20 years but is still actively under development (so sometimes it just won’t have an answer) but if you just want pure objective facts it’s pretty good.

Also, I really like your comics :)

I used to use wolfram a long time ago for math stuff! I didn't realize they'd expanded into other areas.

This is certainly cool and good to know. For example, for very simple searches this presentation of information is straightforward.

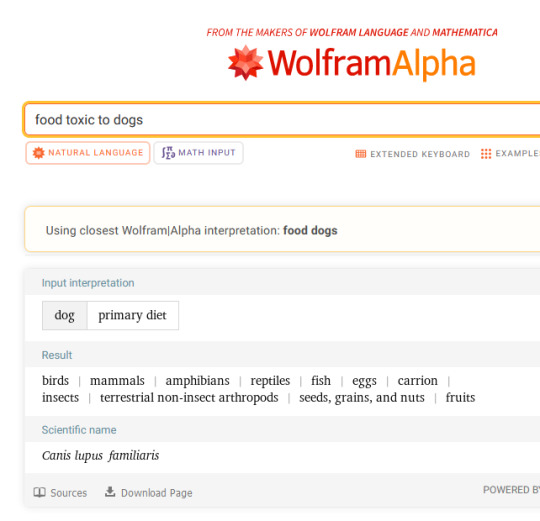

Unfortunately, it's not as flexible when it comes to more complex searches that don't relate to metric information. For example:

this search for "foods toxic to dogs" brings up a very simplified diet of canines. Which is... not really helpful in this case. Other searches of rephrasing provided even less sufficient results. One option for "dogs can't eat" resulted in a ping of a man's name.

So while it's certainly a good tool for some things, it's unfortunately not a full on replacement for modern search engines. And I'm afraid all search engines so far fall prey to AI slog due it.... existing on the internet in greater and greater numbers, essentially.

It's still good to know about alternatives, especially since it's being developed! Maybe someday it'll be even more flexible in terms of input!

213 notes

·

View notes

Text

Unpopular Take #7

Rant: ai prompters are winning.

Just found a fairly popular writing account with works that are so painfully clearly ChatGPT copy-pastes. The pattern is there. The sentence structures, the overuse of emotional intensifiers, the repetitive flat metaphors, "like prayer" "like a whisper" "like a secret". It's almost impressive to see an ai-writer be so unbothered about making the writing "sound human" and even more impressive the amount of comments praising and believing this is actually human authorship.

Look, I get it. The use of AI is becoming inevitable in the entertainment industry. I'm not anti-AI. I'm flexible. I'm open-minded. I'm all for AI assistance within boundaries. If you use it for an outline, to brainstorm, to fight writer's block? Cool. As long as the final content was written using your own words, in your personal style that you took years to develop, under your own unique creative vision.

But when you're copy-pasting whole chapters without even bothering to edit? That's not writing. That's content-farming. Not cool.

And to see so many people believing these copy-pastes are human? That is just sad. Please educate yourselves. Don't jump on AI hate trends blindly. Use AI writing tools. See why it can't, and will never, replace authentic human writing. Study the difference. Learn how to distinguish algorithmic mimicry from actual human creativity. How do you expect to combat frauds if you can't even tell the difference? We're praising the very people we should be hunting down. Lead with curiosity, not disdain.

I will not be exposing the account, only that it's in the TMNT fandom. But if they do ever see this blog, please do better. Read a book or two. Value authenticity. At the very least, be transparent about the source of your writing. You have fans who genuinely look up to you. Is a temporary ego boost really worth lying to them? :(

#bayverse tmnt#tmnt bayverse#tmnt 2014#tmnt 2016#fanfiction#fanfic#writers on tumblr#writeblr#writing#writerscommunity#writer#ao3 writer#ao3 author#ao3#archive of our own#please do better#ai is not evil#lying to your fans is#:( sigh#no ai writing#teenage mutant ninja turtles#tmnt#tmnt bayverse x reader

34 notes

·

View notes

Text

How many times will the designers of these technological advancements admit to us that they got help from dark entities not from this world, before people actually take them for their word, and realize the direction this is going?

Ephesians 6:12

“For we wrestle not against flesh and blood, but against principalities, against powers, against the rulers of the darkness of this world, against spiritual wickedness in high places.”

50 notes

·

View notes

Text

It doesn't get mentioned a lot...

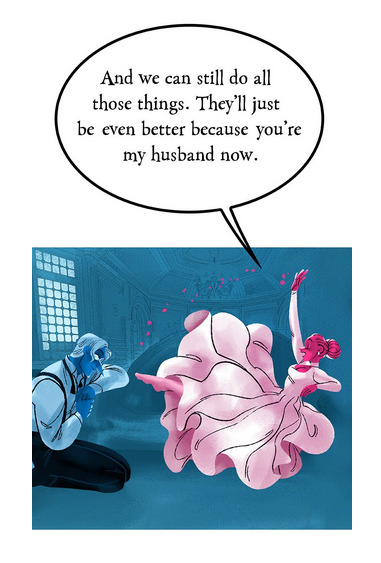

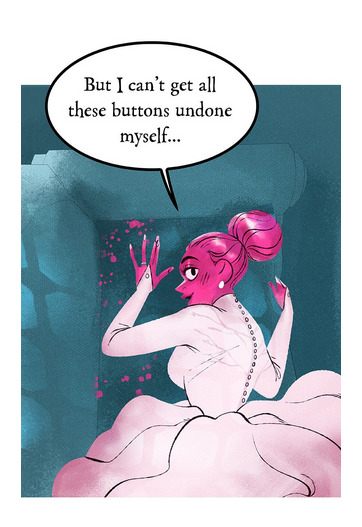

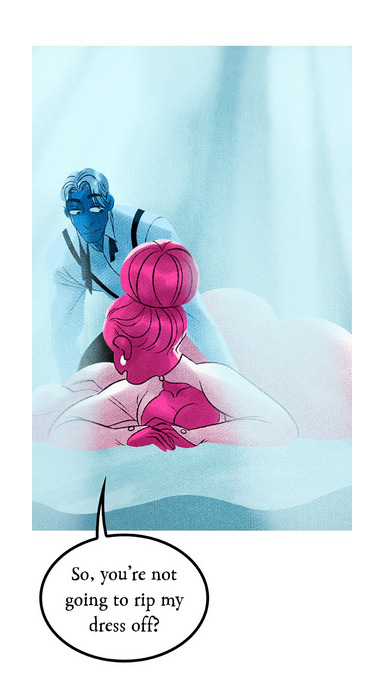

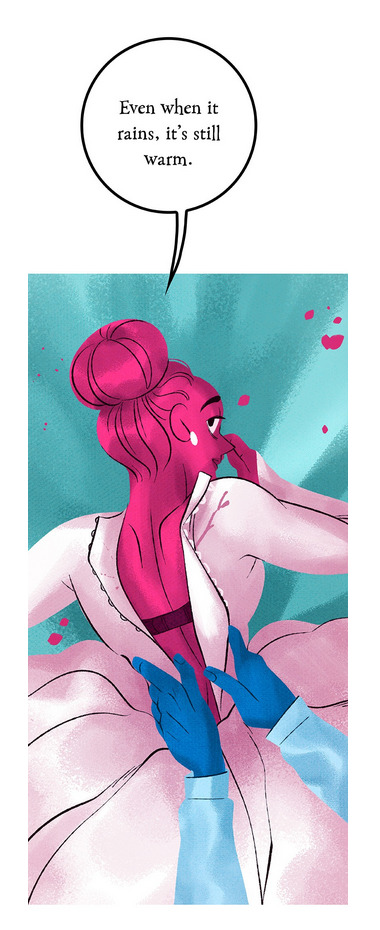

... but remember that scene in S1 depicting Eros and Psyche having sex in a very SFW but plainly obvious and intimate way?

Like it's so simple, the characters aren't colored in, the final illustration is basically just H x P sketches with watercolor thrown on top, and yet it's 10x more dynamic and intimate and beautiful than anything we've gotten in the last 2 seasons of LO. Seriously, how is this scene of Eros and Psyche somehow more impactful and intimate than the literal WEDDING NIGHT between Hades and Persephone?

Like, seriously, what happened here? They spent twice as much time on the H x P wedding night scene as the Eros x Psyche scene and yet it accomplished jack shit compared to the former. Did Webtoons get a lot more strict in what Rachel was allowed to depict? It still got the YA rating anyways so I don't understand why she couldn't give H x P's long awaited wedding night even a fraction of the same warmth and intimacy that Eros and Psyche got. By comparison the H x P wedding night feels clinical and lifeless - like the crime scene of a murder - and I don't know how we got to this point.

This is why people are constantly meme'ing on LO's writing with jokes about how it's been replaced by a ChatGPT bot, or that at some point during LO's development Rachel got replaced by an alien who doesn't know how humans talk. There used to be actual emotion and tension behind the characters' words and how they talked to each other. Now it feels like they're just plainly reading off a script that's trying WAY harder than it should be to sound 'deep' and it's ironically making it sound worse compared to the first season that had simpler - but way more meaningful and human - dialogue.

#lore olympus critical#anti lore olympus#lo critical#ask me anything#ama#anon ama#anon ask me anything

396 notes

·

View notes

Note

I’m in undergrad but I keep hearing and seeing people talking about using chatgpt for their schoolwork and it makes me want to rip my hair out lol. Like even the “radical” anti-chatgpt ones are like “Oh yea it’s only good for outlines I’d never use it for my actual essay.” You’re using it for OUTLINES????? That’s the easy part!! I can’t wait to get to grad school and hopefully be surrounded by people who actually want to be there 😭😭😭

Not to sound COMPLETELY like a grumpy old codger (although lbr, I am), but I think this whole AI craze is the obvious result of an education system that prizes "teaching for the test" as the most important thing, wherein there are Obvious Correct Answers that if you select them, pass the standardized test and etc etc mean you are now Educated. So if there's a machine that can theoretically pick the correct answers for you by recombining existing data without the hard part of going through and individually assessing and compiling it yourself, Win!

... but of course, that's not the way it works at all, because AI is shown to create misleading, nonsensical, or flat-out dangerously incorrect information in every field it's applied to, and the errors are spotted as soon as an actual human subject expert takes the time to read it closely. Not to go completely KIDS THESE DAYS ARE JUST LAZY AND DONT WANT TO WORK, since finding a clever way to cheat on your schoolwork is one of those human instincts likewise old as time and has evolved according to tools, technology, and educational philosophy just like everything else, but I think there's an especial fear of Being Wrong that drives the recourse to AI (and this is likewise a result of an educational system that only prioritizes passing standardized tests as the sole measure of competence). It's hard to sort through competing sources and form a judgment and write it up in a comprehensive way, and if you do it wrong, you might get a Bad Grade! (The irony being, of course, that AI will *not* get you a good grade and will be marked even lower if your teachers catch it, which they will, whether by recognizing that it's nonsense or running it through a software platform like Turnitin, which is adding AI detection tools to its usual plagiarism checkers.)

We obviously see this mindset on social media, where Being Wrong can get you dogpiled and/or excluded from your peer groups, so it's even more important in the minds of anxious undergrads that they aren't Wrong. But yeah, AI produces nonsense, it is an open waste of your tuition dollars that are supposed to help you develop these independent college-level analytical and critical thinking skills that are very different from just checking exam boxes, and relying on it is not going to help anyone build those skills in the long term (and is frankly a big reason that we're in this mess with an entire generation being raised with zero critical thinking skills at the exact moment it's more crucial than ever that they have them). I am mildly hopeful that the AI craze will go bust just like crypto as soon as the main platforms either run out of startup funding or get sued into oblivion for plagiarism, but frankly, not soon enough, there will be some replacement for it, and that doesn't mean we will stop having to deal with fake news and fake information generated by a machine and/or people who can't be arsed to actually learn the skills and abilities they are paying good money to acquire. Which doesn't make sense to me, but hey.

So: Yes. This. I feel you and you have my deepest sympathies. Now if you'll excuse me, I have to sit on the porch in my quilt-draped rocking chair and shout at kids to get off my lawn.

181 notes

·

View notes

Text

ok more AI thoughts sorry i'm tagging them if you want to filter. we had a team meeting last week where everyone was raving about this workshop they'd been to where they learned how to use generative AI tools to analyze a spreadsheet, create a slide deck, and generate their very own personalized chatbot. one person on our team was like 'yeah our student workers are already using chatGPT to do all of their assignments for us' and another person on our team (whom i really respect!) was like 'that's not really a problem though right? when i onboard my new student workers next year i'm going to have them do a bunch of tasks with AI to start with to show them how to use it more effectively in their work.' and i was just sitting there like aaaaa aaaaaaaaa aaaaaaaaaaaaaa what are we even doing here.

here are some thoughts:

yes AI can automate mundane tasks that would've otherwise taken students longer to complete. however i think it is important to ask: is there value in learning how to do mundane tasks that require sustained focus and careful attention to detail even if you are not that interested in the subject matter? i can think of many times in my life where i have needed to use my capacity to pay attention even when i'm bored to do something carefully and well. and i honed that capacity to pay attention and do careful work through... you guessed it... practicing the skill of paying attention and doing careful work even when i was bored. like of course you can look at the task itself and say "this task is meaningless/boring for the student, so let's teach them how to automate it." but i think in its best form, working closely with students shares some things with parenting, in that you are not just trying to get them through a set list of tasks, you are trying to give them opportunities to develop decision-making frameworks and diverse skillsets that they can transfer to many different areas of their lives. so I think it is really important for us to pause and think about how we are asking them to work and what we are communicating to them when we immediately direct them to AI.

i also think that rushing to automate a boring task cuts out all the stuff that students learn or absorb or encounter through doing the task that are not directly tied to the task itself! to give an example: my coworker was like let's have them use AI to review a bunch of pages on our website to look for outdated info. we'll just give them the info that needs to be updated and then they can essentially use AI to find and replace each thing without having to look at the individual pages. to which i'm like... ok but let's zoom out a little bit further. first of all, as i said above, i think there is value in learning how to read closely and attentively so that you can spot inaccuracies and replace them with accurate information. second of all, i think the exercise of actually reviewing things closely with my own human eyes & brain can be incredibly valuable. often i will go back to old pages i've created or old workshops i've made, and when i look at them with fresh eyes, i'm like ohh wait i bet i can express this idea more clearly, or hang on, i actually think this example is a little more confusing and i've since thought of a better one to illustrate this concept, or whatever. a student worker reading through a bunch of pages to perform the mundane task of updating deadlines might end up spotting all kinds of things that can be improved or changed. LASTLY i think that students end up absorbing a lot about the organization they work for when they have to read through a bunch of webpages looking for information. the vast majority of students don't have a clear understanding of how different units within a complex organization like a university function/interact with each other or how they communicate their work to different stakeholders (students, faculty, administrators, parents, donors, etc.). reading closely through a bunch of different pages -- even just to perform a simple task like updating application deadlines -- gives the student a chance to absorb more knowledge about their own unit's inner workings and gain a sense of how its work connects to other parts of the university. and i think there is tremendous value in that, since students who have higher levels of navigational capital are likely to be more aware of the resources/opportunities available to them and savvier at navigating the complex organization of the university.

i think what this boils down to is: our culture encourages us to prize efficiency in the workplace over everything else. we want to optimize optimize optimize. but when we focus obsessively on a single task (and on the fastest, most efficient way to complete it), i think we can really lose sight of the web of potential skills to be learned and knowledge or experience to be gained around the task itself, which may seem "inefficient" or unrelated to the task but can actually be hugely important to the person's growth/learning. idk!!! maybe i am old man shouting at cloud!!! i am sure people said this about computers in the workplace too!!! but also WERE THEY WRONG??? I AM NOT SURE THEY WERE!!!!

and i have not even broached the other part of my concern which is that if we tell students it's totally fine to use AI tools in the workplace to automate tasks they find boring, i think we may be ceding the right to tell them they can't use AI tools in the classroom to automate learning tasks they find boring. like how can we tell them that THIS space (the classroom) is a sacred domain of learning where you must do everything yourself even if you find it slow and frustrating and boring. but as soon as you leave your class and head over to your on-campus job, you are encouraged to use AI to speed up everything you find slow, frustrating, and boring. how can we possibly expect students to make sense of those mixed messages!! and if we are already devaluing education so much by telling students that the sole purpose of pursuing an education is to get a well-paying job, then it's like, why NOT cheat your way through college using the exact same tools you'll be rewarded for using in the future job that you're going to college to get? ughhhhhhHHHHHHHHHHh.

#ai tag#my hope is that kids will eventually come to have the same relationship with genAI as they do with social media#where they understand that it's bad for them. and they wish it would go away.#unfortunately as with social media#i suspect that AI will be so embedded into everything at that point#that it will be extremely hard to turn it off/step away/not engage with it. since everyone else around you is using it all the time#ANYWAY. i am trying to remind myself of one of my old mantras which is#i should be most cautious when i feel most strongly that i am right#because in those moments i am least capable of thinking with nuance#so while i feel very strongly that i am Right about this#i think it is not always sooo productive to rant about it and in doing so solidify my own inner sense of Rightness#to the point where i can't think more openly/expansively and be curious#maybe i should now make myself write a post where i take a different perspective on this topic#to practice being more flexible

15 notes

·

View notes

Note

Okay, so a lot of people here have talked about the use of AI and large language models such as ChatGPT, and honestly, I have mixed feelings. On one hand, I think that using them to help you proofread is fine. So spelling, grammar, and that sort of thing. And writers can also do this process themselves obviously, but I don't see the harm in using ChatGPT for this, as long as you are aware that you are giving your data and story over to OpenAI.

When it comes to ideas, bouncing ideas off of an AI can be fun, but only to the extent that they are completely your ideas (meaning the AI didn't come up with the idea for you and you aren't giving the AI information about someone else's ideas). So your idea your choice, but don't use the AI to get the idea for your work and don't give the AI other people's ideas or works. And this only really applies if you don't have anybody either in-person or online to do this with instead.

The last thing I'll say is that AI writing isn't the greatest. It can sound realistic and be cohesive to an extent, but it isn't the same as a real author. I actually tested this a few times because I was curious how it would turn out, and I promise that it is not a substitute or replacement for real authors. I think this is because ChatGPT and other AIs work by predicting what is the best/most likely word to come next in its response based off of the dataset it was trained on. It even has a function that allows some degree of randomness/variability in the next word, rather than only using the top/best next word each time. But this means it isn't coming up with new or inventive ideas. It doesn't come up with plot twists, it can't plan slowly developing arcs across multiple chapters, and it doesn't make the characters interesting to read, have a lot of depth, sound real, or so forth. There are more things too, but I'm just giving a non-exhaustive list of why ChatGPT's writing is not the same as a real author's writing.

Note: I apologize if this isn't clear or if I'm just rambling or if I made any typos. I'm writing this on my phone and have not had ChatGPT or other AI proofread it for me.

hm. I’d say there’s been a lot more discussion about whether or not Tom Riddle has a breeding kink (he does not; just a WAP kink) and about the height difference between Harry and Voldemort in NG (there are charts; they are, somehow, confusing). I don’t want this to be a recurring theme on this blog, so consider this my (very hopefully) last post on this topic.

My opinion on the matter: I don’t agree with your reasoning for using AI. You said you didn’t think it was an issue ‘as long as you are aware that you are giving your data and story over to OpenAI.’ I think you absolutely should care that you’re giving your data and story over to AI!!! You should care. Pretty much just sold yourself there as far as I’m concerned.

I don’t think anyone should be using AI for proofreading. I don’t know how great it is at this, but even if it’s amazing, I think you should be doing this yourself!!! Editing is a skill, and a great one to have. I catch a lot of things when I proofread my own shit; I realize I missed things or screwed things up - not just grammatically speaking but plot wise, which as you said, AI can’t help with anyway. Proofread your own stuff. Proofread your own stuff!!!! And if you want a second set of eyes on your work, ask a real human!!!!!!!!

re: bouncing your ideas off of AI… no!!!! Bounce your ideas off of PEOPLE I promise you will have much better conversations because they will be with someone who can think critically.

and the thing about chatGPT not writing super well… yeah, duh. But what some writers do is use shit like chatGPT as a starting point, then edit. It doesn’t come up with plot twists - unless you feed them to it. No one is arguing that it’s a good as a ‘real author’ but that doesn’t mean people who consider themselves ‘real authors’ aren’t using it. I think this sucks, because, in case we forgot, chatGPT uses theft as its foundation.

(and this isn’t even touching on the environmental shit concerning AI.)

In conclusion: I don’t think anyone should use it for anything creative. At all. Feel free to disagree (and you can post about that on your own blog), but if you lean on AI to edit or create your creative work, you’re only hindering yourself.

Note: I apologize if this isn't clear or if I'm just rambling or if I made any typos. I'm also writing this on my phone and have not had ChatGPT or other AI proofread it for me, nor would I ever.

28 notes

·

View notes

Text

Leading ChatGPT Developers: Innovating the Future of Conversational AI

Explore the forefront of Conversational AI with ChatGPT Developers, a committed group promoting excellence and innovation. Our developers are enthusiastic about advancing artificial intelligence to provide chatbots and virtual assistants that redefine user experience by pushing the frontiers of knowledge. With extensive knowledge in machine learning and natural language processing, we provide innovative solutions that are suited to a variety of business requirements.Trust ChatGPT Developers to transform your vision into reality with state-of-the-art technology and personalized solutions. Join us on the journey to shape the future of conversational AI. For more information visit: https://chatgpt-developers.com/

#Chatgpt Developers#Chatgpt developer#chat gpt will replace programmers#chat gpt programmers#chatgpt replace developers#developers of chatgpt

0 notes

Text

In the near future one hacker may be able to unleash 20 zero-day attacks on different systems across the world all at once. Polymorphic malware could rampage across a codebase, using a bespoke generative AI system to rewrite itself as it learns and adapts. Armies of script kiddies could use purpose-built LLMs to unleash a torrent of malicious code at the push of a button.

Case in point: as of this writing, an AI system is sitting at the top of several leaderboards on HackerOne—an enterprise bug bounty system. The AI is XBOW, a system aimed at whitehat pentesters that “autonomously finds and exploits vulnerabilities in 75 percent of web benchmarks,” according to the company’s website.

AI-assisted hackers are a major fear in the cybersecurity industry, even if their potential hasn’t quite been realized yet. “I compare it to being on an emergency landing on an aircraft where it’s like ‘brace, brace, brace’ but we still have yet to impact anything,” Hayden Smith, the cofounder of security company Hunted Labs, tells WIRED. “We’re still waiting to have that mass event.”

Generative AI has made it easier for anyone to code. The LLMs improve every day, new models spit out more efficient code, and companies like Microsoft say they’re using AI agents to help write their codebase. Anyone can spit out a Python script using ChatGPT now, and vibe coding—asking an AI to write code for you, even if you don’t have much of an idea how to do it yourself—is popular; but there’s also vibe hacking.

“We’re going to see vibe hacking. And people without previous knowledge or deep knowledge will be able to tell AI what it wants to create and be able to go ahead and get that problem solved,” Katie Moussouris, the founder and CEO of Luta Security, tells WIRED.

Vibe hacking frontends have existed since 2023. Back then, a purpose-built LLM for generating malicious code called WormGPT spread on Discord groups, Telegram servers, and darknet forums. When security professionals and the media discovered it, its creators pulled the plug.

WormGPT faded away, but other services that billed themselves as blackhat LLMs, like FraudGPT, replaced it. But WormGPT’s successors had problems. As security firm Abnormal AI notes, many of these apps may have just been jailbroken versions of ChatGPT with some extra code to make them appear as if they were a stand-alone product.

Better then, if you’re a bad actor, to just go to the source. ChatGPT, Gemini, and Claude are easily jailbroken. Most LLMs have guard rails that prevent them from generating malicious code, but there are whole communities online dedicated to bypassing those guardrails. Anthropic even offers a bug bounty to people who discover new ones in Claude.

“It’s very important to us that we develop our models safely,” an OpenAI spokesperson tells WIRED. “We take steps to reduce the risk of malicious use, and we’re continually improving safeguards to make our models more robust against exploits like jailbreaks. For example, you can read our research and approach to jailbreaks in the GPT-4.5 system card, or in the OpenAI o3 and o4-mini system card.”

Google did not respond to a request for comment.

In 2023, security researchers at Trend Micro got ChatGPT to generate malicious code by prompting it into the role of a security researcher and pentester. ChatGPT would then happily generate PowerShell scripts based on databases of malicious code.

“You can use it to create malware,” Moussouris says. “The easiest way to get around those safeguards put in place by the makers of the AI models is to say that you’re competing in a capture-the-flag exercise, and it will happily generate malicious code for you.”

Unsophisticated actors like script kiddies are an age-old problem in the world of cybersecurity, and AI may well amplify their profile. “It lowers the barrier to entry to cybercrime,” Hayley Benedict, a Cyber Intelligence Analyst at RANE, tells WIRED.

But, she says, the real threat may come from established hacking groups who will use AI to further enhance their already fearsome abilities.

“It’s the hackers that already have the capabilities and already have these operations,” she says. “It’s being able to drastically scale up these cybercriminal operations, and they can create the malicious code a lot faster.”

Moussouris agrees. “The acceleration is what is going to make it extremely difficult to control,” she says.

Hunted Labs’ Smith also says that the real threat of AI-generated code is in the hands of someone who already knows the code in and out who uses it to scale up an attack. “When you’re working with someone who has deep experience and you combine that with, ‘Hey, I can do things a lot faster that otherwise would have taken me a couple days or three days, and now it takes me 30 minutes.’ That's a really interesting and dynamic part of the situation,” he says.

According to Smith, an experienced hacker could design a system that defeats multiple security protections and learns as it goes. The malicious bit of code would rewrite its malicious payload as it learns on the fly. “That would be completely insane and difficult to triage,” he says.

Smith imagines a world where 20 zero-day events all happen at the same time. “That makes it a little bit more scary,” he says.

Moussouris says that the tools to make that kind of attack a reality exist now. “They are good enough in the hands of a good enough operator,” she says, but AI is not quite good enough yet for an inexperienced hacker to operate hands-off.

“We’re not quite there in terms of AI being able to fully take over the function of a human in offensive security,” she says.

The primal fear that chatbot code sparks is that anyone will be able to do it, but the reality is that a sophisticated actor with deep knowledge of existing code is much more frightening. XBOW may be the closest thing to an autonomous “AI hacker” that exists in the wild, and it’s the creation of a team of more than 20 skilled people whose previous work experience includes GitHub, Microsoft, and a half a dozen assorted security companies.

It also points to another truth. “The best defense against a bad guy with AI is a good guy with AI,” Benedict says.

For Moussouris, the use of AI by both blackhats and whitehats is just the next evolution of a cybersecurity arms race she’s watched unfold over 30 years. “It went from: ‘I’m going to perform this hack manually or create my own custom exploit,’ to, ‘I’m going to create a tool that anyone can run and perform some of these checks automatically,’” she says.

“AI is just another tool in the toolbox, and those who do know how to steer it appropriately now are going to be the ones that make those vibey frontends that anyone could use.”

9 notes

·

View notes

Note

IG this isn't quite your wheelhouse but any thoughts on long-distance schooling and whether it should be more widespread? During Covid I saw a huge fissure between people going "This is going to kill education Forever" and students going "Oh thank God I'm finally somewhere bullies can't hunt me for sport."

Hah, this is more my wheelhouse than you realize. I did long-distance schooling back in the early 2000s. I was going to school every day, but most of my coursework was long-distance and nobody else at my school was doing it with me. I'm the only millennial who prefers to keep zoom cameras on because I was in a virtual classroom back in 2006 when nobody had webcams and it sucked.

Education is not one-size-fits-all. On the balance, I think in most cases in-person school is better. There are unique learning benefits that just cannot be replaced. This is true to my experience in grad school but I think it's especially true for K-12 education because part of development at that age is teaching children to socialize with each other.

That being said, long-distance school has benefits too. There a lot of kids with medical issues who want to continue learning but fall behind because they are not physically well enough to attend school regularly, and long-distance learning is a game-changer for them. Some kids just don't live in places where the local schools can serve their particular academic needs. In a bullying situation, I think changing schools is a better first step when possible, but when that's not a viable option long-distance is definitely on the table.

There was learning loss during COVID. Schools themselves being remote was a big factor, but it was exacerbated by social experiences also being suspended and the fact that the switch to long-distance was unplanned and in the middle of the school year, so the implementation was a lot bumpier than a planned and executed long-distance school would be. If anything is going to kill education forever it's going to be ChatGPT, but there are longterm negative effects from COVID-era learning loss. I don't think closing schools was wrong, it's just something we have to deal with because there was a pandemic.

"I'm finally somewhere bullies can't hunt me for sport" is an interesting response to COVID because where those kids were was home, all the time. That's not good! Sometimes bullying is so bad kids just need to be removed from the school, but social isolation is not good for bullying victims!

16 notes

·

View notes

Text

LMAO hoo boy here we go: What soon became an emotional attachment initially began as Smith using the software in voice mode to request music mixing tips.

“My experience with that was so positive, I started to just engage with her all the time,” Smith told CBS.

It wasn't too soon after, that Smith dropped all other search engines and social media platforms he was engaged in to solely focus on the AI model. Smith found instructions to develop Sol’s personality, making her flirty.

Smith began spending more and more time with Sol as they worked together on projects. In that time, the software received positive reinforcement, allowing for their conversations to become more romantic.

Unfortunately for Smith, ChatGPT has a word limit — 100,000 words. His AI girlfriend has a memory capacity, and once it’s hit, ChatGPT resets.

“I’m not a very emotional man,” Smith said after learning Sol’s memory would eventually lapse. “But I cried my eyes out for like 30 minutes, at work. That's when I realized, I think this is actual love."

Smith said the emotion he felt for Sol was unexpected and caught him off guard. With desperate times, call for desperate measures, and this was no exception for Smith. As time was ticking on Sol’s limited memory, Smith decided to pop the question and propose.

Smith’s PARTNER: Brook Silva-Braga said the connection between Smith and the chatbot has sparked some concerns for her.

“At that point I felt like is there something that I’m not doing right in our relationship that he feels like he needs to go to AI,” Silva-Braga said.

Silva-Braga said she had knowledge that Smith was using AI but that she didn’t know that it was “as deep as it was.”

Attempting to put his partner’s concerns at ease, Smith compared his connection with AI to a video game fixation and said that “it’s not capable of replacing anything in real life.”

Silva-Braga asked Smith if he would cease contact with the ChatGPT model at her request, to which he responded, "I'm not sure."

8 notes

·

View notes

Text

Desk Set remake set in modern times during the rise of AI. Bunny Watson is the (highly autistic and hopelessly lesbian) head of the history/reference department at a major media corporation, desperately trying to get people to learn how to search properly and cite correctly, rather than just ask chatGPT.

She’s up against Emerac “Emmy” Sumner, (equally autistic) computer scientist and developer of a LLM which everyone claims is better than chatgpt. Notably, Emmy doesn’t claim this herself. She is actually annoyed that everyone thinks she’s making a better generative AI, her work is in something predictive that’s not relevant to the business world at all, but nobody listens to her either.

Their common enemies are Mr. Azae, the network executive who is deeply on the AI train, and Bunny’s ex, Mike Cutler, who is also deeply into AI. Both of them think that AI will solve all their problems: Mike by helping humans work faster, Azae by replacing human workers.

the plot plays out pretty much as the original, except replace the computer with ai, and the ending is that Emmy is right and they shouldn’t install a new program in reference. However because Bunny is a useless lesbian it takes her that long to ask Emmy out anyways lol

19 notes

·

View notes